Introduction

Rice provides energy and essential nutrients, and it is a central part of the culture and celebrations of many communities. Its cultivation gives farmers a livelihood and supports local economies, and because it is inexpensive and versatile, many people depend on it as a staple food. Rice also reflects our heritage and our connection to nature. Understanding what determines rice quality therefore helps us appreciate its role in daily life and in society.

Assessing rice quality is crucial for several reasons. First, it ensures that the rice we eat is safe and nutritious, by checking for essential nutrients and for the absence of harmful substances. Second, it verifies that the rice meets consumer preferences for appearance, texture, and cooking properties. Third, quality influences market prices, supports farmers, and helps industries produce consistent products. Proper assessment also guides storage conditions and compliance with food safety regulations, contributing to a reliable rice industry that meets consumer demand.

Classification of rice grains and corn seeds using various image pre-processing techniques and machine learning algorithms, such as K-Nearest Neighbor (K-NN), Artificial Neural Network (ANN), and Support Vector Machine (SVM), has been reported [1-14]. These works address different aspects of rice grain assessment, including defect detection, variety classification, and quality grading, with reported accuracies ranging from 39% to 100%. However, most of them do not clearly describe the datasets used for training and testing. Classification of food grains using image processing and a Probabilistic Neural Network (PNN) has achieved accuracies of 96% and 100% [15-16], but these works also highlight the tedious process of arranging the grain kernels so that they neither overlap nor touch each other. Classification based on bulk grain images using color and texture features has also been reported [17]. Because the images are taken of bulk grain rather than of single kernels, the tedious arrangement of kernels in a non-touching pattern is not required in that work, which achieved a classification accuracy of 90% using a Back Propagation Neural Network (BPNN). Classification of rice varieties and plant seed kernels using image processing and Convolutional Neural Networks (CNNs) has also been presented [18-24], although only a few of these works compare their performance with that of pre-trained CNN models. Identification and classification of rice grains from single-kernel images using different deep learning methods has been reported as well [25-26], with accuracies ranging from 84% to 99%. Finally, some of the classification tasks in the literature involve only a small number of class labels and are therefore less challenging.

The literature also shows that manual identification and classification of grains is tedious and time-consuming, and that its results are subjective. A robust classification process based on digital image processing and computer vision is therefore increasingly important for overcoming these problems of manual inspection. Classification based on single-kernel images is also more laborious than classification based on bulk grain images, because the former requires the kernels to be arranged so that they do not touch or overlap. Moreover, most classification approaches reported in recent years rely on extracting certain attributes or features from the input image. Feature extraction is time-consuming and requires prior knowledge of image descriptors to identify and extract the most discriminative features. Too many features can also degrade classification, because some of them may be redundant. Suitable feature extraction alone therefore cannot guarantee accurate classification; redundant features may need to be filtered out, so feature selection should accompany feature extraction to achieve better results. CNNs, on the other hand, have an architecture designed to learn patterns and features directly from images. In this study, several CNNs and other classifiers are used to grade different varieties of rice grain, and their performances are compared to determine the most effective approach for categorizing bulk rice samples.

Materials and Methods

Data Collection

A dataset of high-resolution images representing five different qualities (grades) of rice grain was compiled. Each grade contains 100 images, giving a total of 500 samples. The images were captured with a digital camera in a controlled environment using the following settings: camera, Canon EOS 200D II; lens, 50 mm f/1.8; focal length, 50 mm; distance from object, 25 cm; aperture, f/4.5; shutter speed, 1/50 s; ISO, 400. Each image is 3840×2160 pixels with three color channels (RGB) and is stored in JPEG format. Figure 1 shows two sample images for each of the five rice qualities.

Figure 1: Sample images (two per grade) for each of the five qualities of rice.

Texture Features Extraction

The Gray-Level Co-occurrence Matrix (GLCM) method is used to capture the texture of an object. A GLCM counts how often pairs of gray levels occur in pixels separated by a given offset, i.e., a distance along one direction. To speed up processing, the input images are quantized to 64 gray levels, which yields a 64×64 GLCM. Pixel pairs are counted at angles of 0°, 45°, 90°, and 135°, and the mean of the four resulting GLCMs is computed. Nine statistical properties are used as features: Mean, Variance, Range, Energy, Entropy, Contrast, Inverse Difference Moment, Homogeneity, and Correlation. Table 1 shows sample GLCM feature values extracted using MATLAB.
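The paper does not list its MATLAB code for this step, so the NumPy sketch below only illustrates the GLCM procedure described above under stated assumptions: 8-bit grayscale input, a pixel distance of 1, the row/column offsets conventionally used for 0°, 45°, 90° and 135° (as in MATLAB's `graycomatrix`), and common textbook definitions for six of the nine properties. Mean, Variance and Range are omitted because their exact definitions are not given here, and all function names are hypothetical.

```python
import numpy as np

# Assumed (row, col) offsets at distance 1 for 0°, 45°, 90° and 135°.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]

def quantize(gray, levels=64):
    """Map 8-bit gray values (0-255) onto `levels` gray levels (0 .. levels-1)."""
    return (np.asarray(gray, dtype=np.int64) * levels) // 256

def glcm(q, offset, levels=64):
    """Normalized co-occurrence matrix of (reference, neighbour) gray-level pairs at one offset."""
    dr, dc = offset
    rows, cols = q.shape
    r0, r1 = max(0, -dr), rows - max(0, dr)
    c0, c1 = max(0, -dc), cols - max(0, dc)
    ref = q[r0:r1, c0:c1]                        # reference pixels that have a valid neighbour
    nbr = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc]    # their neighbours at the given offset
    m = np.zeros((levels, levels))
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1)  # count each (i, j) pair
    return m / m.sum()

def glcm_features(p):
    """Six texture properties from a normalized GLCM p (hypothetical helper)."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    sd_i = np.sqrt(((i - mu_i) ** 2 * p).sum())
    sd_j = np.sqrt(((j - mu_j) ** 2 * p).sum())
    cov = ((i - mu_i) * (j - mu_j) * p).sum()
    nz = p[p > 0]
    return {
        "energy": (p ** 2).sum(),
        "entropy": float(-(nz * np.log2(nz)).sum()),
        "contrast": ((i - j) ** 2 * p).sum(),
        "homogeneity": (p / (1 + np.abs(i - j))).sum(),
        "inverse_difference_moment": (p / (1 + (i - j) ** 2)).sum(),
        "correlation": cov / (sd_i * sd_j) if sd_i * sd_j > 0 else 0.0,
    }

# Usage on a random stand-in image: average the four directional GLCMs, then compute features.
img = np.random.default_rng(0).integers(0, 256, size=(120, 160))
q = quantize(img)
p_mean = np.mean([glcm(q, off) for off in OFFSETS], axis=0)
features = glcm_features(p_mean)
```

Averaging the four directional matrices before computing the properties makes the features approximately insensitive to the orientation of the rice grains in the bulk image.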

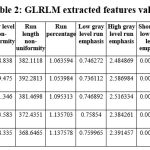

The Gray Level Run Length Matrix (GLRLM) describes texture by counting runs, i.e., consecutive pixels along a direction that share the same gray level, tabulated by gray level and run length. Eleven statistical properties of the GLRLM are used to characterize image texture: Short Run Emphasis, Long Run Emphasis, Gray Level Non-Uniformity, Run Length Non-Uniformity, Run Percentage, Low Gray Level Run Emphasis, High Gray Level Run Emphasis, Short Run Low Gray Level Emphasis, Short Run High Gray Level Emphasis, Long Run Low Gray Level Emphasis, and Long Run High Gray Level Emphasis. Each property quantifies a different aspect of the texture pattern. Table 2 shows sample GLRLM feature values.
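To make the run-length idea concrete, here is a minimal NumPy sketch. It assumes a single horizontal (0°) direction and uses common textbook definitions for seven of the eleven properties; the paper does not state which directions were used or how they were combined, and the function names are hypothetical.

```python
import numpy as np

def glrlm_0deg(q, levels):
    """Run-length matrix for the 0° (horizontal) direction only (assumption).

    R[g, l - 1] counts runs of gray level g that are exactly l pixels long.
    """
    rows, cols = q.shape
    R = np.zeros((levels, cols), dtype=np.int64)
    for row in q:
        start = 0
        for k in range(1, cols + 1):
            # A run ends at the end of the row or where the gray level changes.
            if k == cols or row[k] != row[start]:
                R[row[start], k - start - 1] += 1
                start = k
    return R

def glrlm_features(R, n_pixels):
    """Seven of the eleven run-length properties (common textbook definitions)."""
    g = np.arange(1, R.shape[0] + 1)[:, None]   # gray levels, indexed from 1
    l = np.arange(1, R.shape[1] + 1)[None, :]   # run lengths
    n_runs = R.sum()
    return {
        "SRE": (R / l**2).sum() / n_runs,            # Short Run Emphasis
        "LRE": (R * l**2).sum() / n_runs,            # Long Run Emphasis
        "GLN": (R.sum(axis=1) ** 2).sum() / n_runs,  # Gray Level Non-Uniformity
        "RLN": (R.sum(axis=0) ** 2).sum() / n_runs,  # Run Length Non-Uniformity
        "RP": n_runs / n_pixels,                     # Run Percentage
        "LGRE": (R / g**2).sum() / n_runs,           # Low Gray Level Run Emphasis
        "HGRE": (R * g**2).sum() / n_runs,           # High Gray Level Run Emphasis
    }

# Usage on a tiny 2×3 image that is already quantized to 2 gray levels.
q = np.array([[0, 0, 1],
              [1, 1, 1]])
R = glrlm_0deg(q, levels=2)
features = glrlm_features(R, n_pixels=q.size)
```

Here the first row contains a run of two 0s and a run of one 1, and the second row a single run of three 1s, so there are three runs over six pixels and the Run Percentage is 0.5.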

Table 1: GLCM extracted feature values

| Rice quality | Mean | Variance | Range | Energy | Entropy | Contrast | Inverse Difference Moment | Homogeneity | Correlation |
|---|---|---|---|---|---|---|---|---|---|
| Grade 1 | 28.06 | 0.780 | 0.002 | 0.3802 | 12.25 | 0.306 | 0.000 | 6.38 | 0.006 |
| Grade 2 | 31.54 | 0.704 | 0.003 | 0.36 | 12.10 | 0.293 | 0.000 | 7.49 | 0.007 |
| Grade 3 | 40.13 | 0.674 | 0.002 | 0.351 | 12.1 | 0.273 | 0.000 | 6.36 | 0.00 |
| Grade 4 | 35.58 | 0.661 | 0.003 | 0.35 | 12.20 | 0.280 | 0.000 | 7.18 | 0.007 |
| Grade 5 | 39.10 | 0.673 | 0.00 | 0.35 | 12.16 | 0.280 | 0.000 | 6.63 | 0.006 |

Table 2: GLRLM extracted feature values

Model Selection

In this study, five distinct varieties of rice are graded according to their quality using six convolutional neural networks, namely EfficientNet-b0, GoogLeNet, MobileNetV2, ResNet-50, ResNet-101, and ShuffleNet, and four other classifiers, namely Linear Discriminant Analysis (LDA), K-Nearest Neighbor (K-NN), Naive Bayes (NB), and Back Propagation Neural Network (BPNN).

GoogLeNet

The signature component of GoogLeNet is the inception module, which captures details at different scales by applying filters of several sizes (1×1, 3×3, and 5×5) and max pooling in parallel within a single layer and concatenating their outputs. 1×1 convolutions placed before the larger filters reduce the number of channels, which keeps the computational cost manageable as the network grows. GoogLeNet is 22 layers deep and combines convolutional, pooling, and fully connected layers to balance computational efficiency and accuracy. It accepts input images of 224×224 pixels and, in its ImageNet-trained form, classifies them into 1000 object categories.
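The saving from the 1×1 reduction can be checked with a short weight count. The sketch below assumes the inception (3a) settings listed in the original GoogLeNet paper (192 input channels; branch outputs of 64, 128, 32 and 32 channels; a 16-channel reduction before the 5×5 filters) and ignores biases; `conv_weights` is a hypothetical helper.

```python
def conv_weights(k, c_in, c_out):
    """Weights in a k×k convolution from c_in to c_out channels (biases ignored)."""
    return k * k * c_in * c_out

# Assumed inception (3a) settings from the original GoogLeNet paper.
C_IN = 192

# 5×5 branch applied directly to all 192 input channels.
direct_5x5 = conv_weights(5, C_IN, 32)
# 5×5 branch preceded by a 1×1 convolution that reduces the input to 16 channels.
reduced_5x5 = conv_weights(1, C_IN, 16) + conv_weights(5, 16, 32)

# The module output is the channel-wise concatenation of the four branches.
out_channels = 64 + 128 + 32 + 32

print(direct_5x5, reduced_5x5, out_channels)   # 153600 15872 256
```

The reduction cuts the 5×5 branch to roughly a tenth of its weights, which is what allows many inception modules to be stacked.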

ResNet-50

ResNet-50 belongs to the same residual network family as ResNet-18 but is 50 layers deep and uses bottleneck residual blocks, in which 1×1 convolutions reduce and then restore the number of channels around a 3×3 convolution. Like the other ResNets, it relies on skip connections: each block adds its input, through an identity mapping, to the output of its convolutional layers, which lets gradients propagate through the deep network and mitigates the vanishing gradient problem. The network combines residual blocks, convolutional layers, and batch normalization for feature extraction. It accepts input images of 224×224×3 pixels and classifies them into 1000 object categories.
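The sketch below illustrates the two ideas in the paragraph above: the weight count of a 256-channel bottleneck block (as described in the original ResNet paper) compared with two plain 3×3 convolutions at full width, and the skip connection itself. Biases and batch normalization are ignored, and the helper names are hypothetical.

```python
import numpy as np

def conv_weights(k, c_in, c_out):
    """Weights in a k×k convolution (biases and batch-norm parameters ignored)."""
    return k * k * c_in * c_out

# Bottleneck block at 256 channels: 1×1 reduce to 64, 3×3 at 64, 1×1 restore to 256.
bottleneck = conv_weights(1, 256, 64) + conv_weights(3, 64, 64) + conv_weights(1, 64, 256)
# Two plain 3×3 convolutions at the full 256-channel width, for comparison.
plain_pair = 2 * conv_weights(3, 256, 256)

def residual_block(x, f):
    """Skip connection: add the block input to the output of branch f, then apply ReLU."""
    return np.maximum(f(x) + x, 0.0)

# If the learned branch f outputs zeros, the block passes a non-negative input through unchanged.
x = np.array([0.5, 2.0, 0.0])
y = residual_block(x, lambda v: np.zeros_like(v))

print(bottleneck, plain_pair)   # 69632 1179648
```

Because a block can fall back to the identity simply by driving its branch toward zero, adding depth does not have to hurt training, which is the motivation for skip connections.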

EfficientNet-b0

EfficientNet-b0 is built mainly from MBConv (mobile inverted bottleneck convolution) blocks, which combine depth-wise separable convolutions with point-wise (1×1) convolutions and are stacked to capture features at multiple scales. It is the baseline of the EfficientNet family, whose larger variants are obtained by a compound scaling method that scales network depth, width, and input resolution together, balancing accuracy against computational cost. Squeeze-and-excitation (SE) blocks inside the network recalibrate channel-wise feature responses, increasing the model's representational power. In total, EfficientNet-b0 comprises 290 layers, including convolutional, batch normalization, and activation layers. It accepts input images of 224×224×3 pixels and classifies them into 1000 object categories.
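Compound scaling can be written as depth = α^φ, width = β^φ and resolution = γ^φ for a single coefficient φ. The sketch below assumes the constants reported by Tan and Le (2019), α = 1.2, β = 1.1 and γ = 1.15, chosen so that α·β²·γ² ≈ 2; EfficientNet-b0 corresponds to φ = 0. The published B1 to B7 configurations round or adjust these values, so the numbers are indicative only.

```python
# Compound-scaling constants as reported by Tan and Le (2019) (assumption for this sketch).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15   # bases for depth, width and input resolution

def compound_scale(phi):
    """Depth, width and resolution multipliers for compound coefficient phi,
    plus the approximate FLOPs multiplier (FLOPs grow with depth x width^2 x resolution^2)."""
    depth, width, resolution = ALPHA ** phi, BETA ** phi, GAMMA ** phi
    flops = depth * width ** 2 * resolution ** 2
    return depth, width, resolution, flops

b0 = compound_scale(0)   # the baseline network: every multiplier equals 1
b1 = compound_scale(1)   # one scaling step: FLOPs grow by about 1.92, i.e. roughly double
```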

MobileNetV2

MobileNetV2 is a convolutional neural network architecture designed for mobile and embedded vision applications. It typically takes input images of 224×224 pixels with three color channels (RGB). Its output depends on the task; for image classification, it is a probability distribution over the classes. By using inverted residual blocks with linear bottlenecks, MobileNetV2 extracts features effectively while keeping the number of parameters small compared with larger architectures such as ResNet. This efficiency makes it well suited to deployment in resource-constrained environments.
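The compactness comes largely from depthwise separable convolutions. The sketch below compares weight counts for an ordinary 3×3 convolution and a depthwise separable one at 192 channels, and counts the weights of an inverted residual block, assuming the expansion factor t = 6 used in the MobileNetV2 paper. Biases and batch normalization are ignored, and the helper names are hypothetical.

```python
def standard_conv(k, c_in, c_out):
    """Weights in an ordinary k×k convolution (biases ignored)."""
    return k * k * c_in * c_out

def depthwise_separable(k, c_in, c_out):
    """k×k depthwise convolution (one filter per channel) followed by a 1×1 pointwise convolution."""
    return k * k * c_in + c_in * c_out

def inverted_residual(c_in, c_out, t=6, k=3):
    """Inverted residual block: 1×1 expansion by factor t (ReLU6), k×k depthwise (ReLU6),
    then a linear 1×1 projection back to c_out channels (the 'linear bottleneck')."""
    hidden = t * c_in
    return c_in * hidden + depthwise_separable(k, hidden, c_out)

print(standard_conv(3, 192, 192), depthwise_separable(3, 192, 192))   # 331776 38592
print(inverted_residual(32, 32))                                        # 14016
```

The block expands a narrow 32-channel representation to 192 channels only inside the block, where the cheap depthwise filter operates, and then projects it back without a nonlinearity.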

ResNet-101

ResNet-101, a member of the ResNet (Residual Network) family, is a convolutional neural network with 101 layers. Like the other models in the family, it typically takes input images of 224×224 pixels with three color channels (RGB). Its output depends on the task; for image classification, it is a probability distribution over the classes. To address the vanishing gradient problem, ResNet-101 uses residual (skip) connections, which make it possible to train very deep networks.
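The "50" and "101" in the names count the weighted layers. Assuming the per-stage bottleneck block counts listed in the original ResNet paper, the depth follows from three convolutions per block plus the initial 7×7 convolution and the final fully connected layer:

```python
# Bottleneck blocks per stage (conv2_x to conv5_x), as listed in the original ResNet paper.
STAGES = {"ResNet-50": [3, 4, 6, 3], "ResNet-101": [3, 4, 23, 3]}

def weighted_layers(blocks):
    """Three convolutions per bottleneck block, plus the stem convolution and the final FC layer."""
    return 3 * sum(blocks) + 2

depths = {name: weighted_layers(blocks) for name, blocks in STAGES.items()}
print(depths)   # {'ResNet-50': 50, 'ResNet-101': 101}
```

The two networks differ only in the third stage, which grows from 6 to 23 blocks.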

ShuffleNet

ShuffleNet is designed to achieve high performance with low computational cost, particularly for mobile and edge devices. It typically accepts input images of size 224×224 pixels with three color channels (RGB), similar to other models. The output size varies depending on the specific task, such as image classification. ShuffleNet’s architecture introduces channel shuffling operations to enable efficient cross-group information flow while keeping computational overhead low. Compared to ResNet and other large architectures, ShuffleNet generally has fewer parameters, making it suitable for resource-constrained environments such as mobile devices.
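The channel shuffle operation is a reshape, a transpose and a second reshape. A minimal NumPy sketch on an (N, C, H, W) array, where `channel_shuffle` is a hypothetical name:

```python
import numpy as np

def channel_shuffle(x, groups):
    """Interleave channels of an (N, C, H, W) array so each group mixes channels from every group."""
    n, c, h, w = x.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by the number of groups")
    x = x.reshape(n, groups, c // groups, h, w)   # split the channels into groups
    x = x.transpose(0, 2, 1, 3, 4)                # swap the group and within-group axes
    return x.reshape(n, c, h, w)                  # flatten back to C channels

# Six channels labelled 0..5 in three groups: [0, 1], [2, 3], [4, 5].
x = np.arange(6).reshape(1, 6, 1, 1)
print(channel_shuffle(x, groups=3)[0, :, 0, 0])   # [0 2 4 1 3 5]
```

After the shuffle, the next group convolution sees one channel from each previous group, so information flows across groups without a dense (and costly) 1×1 convolution.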

K-Nearest Neighbors (KNN)

K-Nearest Neighbors (KNN) is a simple yet effective classification technique. It assigns a data point the class label that is most common among its k nearest neighbors in the feature space. Unlike many other methods, KNN has no dedicated training phase; it stores all training instances and their labels and compares them with each query during inference. This simplicity comes at a computational cost, particularly for large datasets, because the distance between the query and every training instance must be computed. In addition, the choice of k strongly affects the accuracy of the algorithm and how well it generalizes to new data.
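A minimal KNN sketch follows. It assumes Euclidean distance and a plain majority vote; the paper does not state the distance metric or the value of k used in its MATLAB experiments, and `knn_predict` is a hypothetical name.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, X_query, k=3):
    """Label each query row by majority vote among its k nearest training rows (Euclidean distance)."""
    X_train = np.asarray(X_train, dtype=float)
    preds = []
    for q in np.asarray(X_query, dtype=float):
        dists = np.linalg.norm(X_train - q, axis=1)   # distance to every stored training instance
        nearest = np.argsort(dists, kind="stable")[:k]
        votes = Counter(y_train[i] for i in nearest)
        preds.append(votes.most_common(1)[0][0])
    return preds

# Toy 2-D feature vectors standing in for texture features.
X_train = np.array([[0, 0], [0, 1], [5, 5], [5, 6]])
y_train = ["A", "A", "B", "B"]
preds = knn_predict(X_train, y_train, [[0.2, 0.5], [5, 5.5]], k=3)
```

The loop over all training rows for every query is exactly the inference cost the paragraph mentions.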

Linear Discriminant Analysis (LDA)

Linear Discriminant Analysis (LDA) is a generative model that finds a linear combination of features that maximizes the separation between classes. It models the feature distribution of each class and applies Bayes' theorem to estimate the probability of each class given the observed features. It assumes that the features within each class are normally distributed and that all classes share the same covariance matrix. LDA is interpretable and computationally efficient, but its performance can degrade when these assumptions are violated.
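Under the assumptions just listed (Gaussian classes with one shared covariance matrix S), Bayes' rule yields the linear score δ_k(x) = xᵀS⁻¹μ_k − ½μ_kᵀS⁻¹μ_k + log π_k. The NumPy sketch below fits and applies it; the function names and the toy data are hypothetical, and a pseudo-inverse is used as a safeguard against a singular covariance estimate.

```python
import numpy as np

def lda_fit(X, y):
    """Class means, priors and the inverse of one pooled (shared) covariance matrix."""
    X, y = np.asarray(X, float), np.asarray(y)
    classes = np.unique(y)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    priors = np.array([np.mean(y == c) for c in classes])
    centered = X - means[np.searchsorted(classes, y)]   # subtract each sample's class mean
    cov = centered.T @ centered / (len(X) - len(classes))
    return classes, means, priors, np.linalg.pinv(cov)

def lda_predict(model, X):
    """Pick the class with the largest linear discriminant score."""
    classes, means, priors, inv_cov = model
    X = np.asarray(X, float)
    # delta_k(x) = x^T S^-1 mu_k - 0.5 mu_k^T S^-1 mu_k + log(pi_k)
    scores = X @ inv_cov @ means.T - 0.5 * np.sum(means @ inv_cov * means, axis=1) + np.log(priors)
    return classes[np.argmax(scores, axis=1)]

# Toy data: two well-separated Gaussian classes in two dimensions.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (50, 2)), rng.normal(4, 1, (50, 2))])
y = np.array([1] * 50 + [2] * 50)
model = lda_fit(X, y)
pred = lda_predict(model, [[0, 0], [4, 4]])
```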

Naive Bayes (NB)

Naive Bayes (NB) is a probabilistic classifier based on Bayes' theorem with the "naive" assumption that features are independent given the class. It estimates the class priors and the class-conditional feature probabilities from the training data. NB is computationally efficient and performs well in tasks such as text classification with sparse, high-dimensional data. However, its independence assumption ignores correlations between features in real-world data, which can sometimes lead to suboptimal results.
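The paper does not state which NB variant was used. Because texture features are continuous, the sketch below assumes a Gaussian class-conditional model per feature; the independence assumption shows up as a plain sum of per-feature log-likelihoods. Names and data are hypothetical.

```python
import numpy as np

def gnb_fit(X, y, eps=1e-9):
    """Per-class feature means and variances plus log class priors (Gaussian NB, an assumption)."""
    X, y = np.asarray(X, float), np.asarray(y)
    classes = np.unique(y)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    variances = np.array([X[y == c].var(axis=0) for c in classes]) + eps   # eps avoids division by zero
    log_priors = np.log([np.mean(y == c) for c in classes])
    return classes, means, variances, log_priors

def gnb_predict(model, X):
    """Maximize log prior + sum of independent per-feature Gaussian log-likelihoods."""
    classes, means, variances, log_priors = model
    X = np.asarray(X, float)[:, None, :]   # shape (n, 1, d) broadcasts against (classes, d)
    log_lik = -0.5 * np.sum(np.log(2 * np.pi * variances) + (X - means) ** 2 / variances, axis=2)
    return classes[np.argmax(log_lik + log_priors, axis=1)]

# Toy data: two well-separated Gaussian classes in two dimensions.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (50, 2)), rng.normal(4, 1, (50, 2))])
y = np.array([1] * 50 + [2] * 50)
pred = gnb_predict(gnb_fit(X, y), [[0, 0], [4, 4]])
```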

Backpropagation Neural Network (BPNN)

A Backpropagation Neural Network (BPNN) is an artificial neural network that learns from labeled training data by repeatedly passing inputs forward through the network, propagating the output error backward, and adjusting the weights to reduce that error. BPNNs consist of multiple interconnected layers of nodes and use nonlinear activation functions to capture complex patterns. Although they can model intricate nonlinear relationships in data, they require sufficient labeled data and computational resources for training, which makes them versatile but resource-intensive.
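The forward and backward passes can be shown in a few lines of NumPy. The sketch assumes one tanh hidden layer, a softmax output with cross-entropy loss, and full-batch gradient descent; the paper does not give its BPNN architecture or training settings, so the layer size, learning rate and epoch count here are hypothetical.

```python
import numpy as np

def train_bpnn(X, y, n_hidden=8, lr=0.5, epochs=500, seed=0):
    """One-hidden-layer network trained by full-batch gradient descent with backpropagation."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    k = int(y.max()) + 1
    Y = np.eye(k)[y]                                   # one-hot targets
    W1, b1 = rng.normal(0, 0.5, (d, n_hidden)), np.zeros(n_hidden)
    W2, b2 = rng.normal(0, 0.5, (n_hidden, k)), np.zeros(k)
    losses = []
    for _ in range(epochs):
        # Forward pass: tanh hidden layer, softmax output.
        H = np.tanh(X @ W1 + b1)
        Z = H @ W2 + b2
        P = np.exp(Z - Z.max(axis=1, keepdims=True))
        P /= P.sum(axis=1, keepdims=True)
        losses.append(-np.mean(np.sum(Y * np.log(P + 1e-12), axis=1)))
        # Backward pass: propagate the cross-entropy error from the output to the hidden layer.
        dZ = (P - Y) / n
        dW2, db2 = H.T @ dZ, dZ.sum(axis=0)
        dH = (dZ @ W2.T) * (1 - H ** 2)                # derivative of tanh
        dW1, db1 = X.T @ dH, dH.sum(axis=0)
        # Gradient-descent weight update.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses

def bpnn_predict(params, X):
    W1, b1, W2, b2 = params
    return np.argmax(np.tanh(X @ W1 + b1) @ W2 + b2, axis=1)

# Toy data: two Gaussian classes, standardized before training.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (50, 2)), rng.normal(4, 1, (50, 2))])
X = (X - X.mean(axis=0)) / X.std(axis=0)
y = np.array([0] * 50 + [1] * 50)
params, losses = train_bpnn(X, y)
train_acc = np.mean(bpnn_predict(params, X) == y)
```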

Training Phase

For the CNNs, training is implemented by fine-tuning the selected pre-trained models on the rice dataset. The last few layers of each CNN architecture were retrained to adapt the model to the characteristics of the rice images, using 10% of the dataset for training. During training, the images are fed through the CNN, the model's parameters are adjusted based on the computed loss, and its performance is optimized iteratively. The procedure implemented in MATLAB is as follows:

Define the file path to the dataset.

Load the dataset using an image datastore, with the class labels taken from the folder structure.

Load the pre-trained network and define the network layers, modifying the final layers.

Define the training options for the network: trainingOptions('sgdm', 'MaxEpochs', 10, 'InitialLearnRate', 0.01, 'ValidationData', tdata, 'ValidationFrequency', 1).

Train the network using the 10% training data and the defined options.

Test the trained models on the full (100%) dataset:

Predict the classes of the test images.

Display the images with their predicted classes.

Calculate the accuracy and plot the confusion matrix to evaluate model performance on the test data.
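The evaluation step reduces to counting. The NumPy sketch below builds a confusion matrix and the overall accuracy from true and predicted grade indices; it is an illustration rather than the MATLAB code used in this work, the grades are assumed to be encoded as integers starting at 0, and the six predictions are made up for the example.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i, j] = number of images whose true grade is i and predicted grade is j."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def overall_accuracy(cm):
    """Correct predictions (the diagonal) divided by the number of test images."""
    return np.trace(cm) / cm.sum()

# Hypothetical predictions for six images from three grades (0, 1, 2).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
correct_per_grade = np.diag(cm)   # correctly classified images per grade
acc = overall_accuracy(cm)        # 4 of 6 images correct
```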

For the other classifiers, texture features were first extracted using the GLCM and GLRLM methods, and 10% of these feature values were then used to train the classifiers. The procedure is as follows:

Import the necessary libraries and packages for image processing, feature extraction (GLCM and GLRLM), and classification.

Load the dataset containing images of different grades of rice, ensuring balanced representation across classes.

For each image in the dataset:

Extract texture features using GLCM and GLRLM.

Store the extracted features along with their corresponding class labels.

Train the classifiers using 10% of the extracted texture features and their class labels.

Test the classifiers on the full (100%) dataset.
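With 100 images per grade, a 10% training share means 10 images per grade and 50 in total, while testing uses all 500. The paper does not say how the training samples were chosen; the sketch below assumes a random, per-grade (stratified) selection, and `stratified_split` is a hypothetical helper.

```python
import numpy as np

def stratified_split(labels, train_fraction=0.10, seed=0):
    """Randomly pick the same fraction of samples from every class for training (assumption);
    the test set is the full dataset, as described in the text."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train_idx = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        n_train = int(round(train_fraction * len(idx)))
        train_idx.extend(rng.choice(idx, size=n_train, replace=False))
    # Testing on 100% of the data means the training samples are also part of the test set.
    test_idx = np.arange(len(labels))
    return np.sort(np.array(train_idx)), test_idx

labels = np.repeat(np.arange(5), 100)   # five grades, 100 images each
train_idx, test_idx = stratified_split(labels)
```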

Results

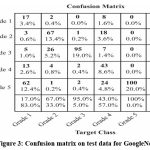

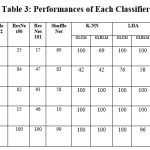

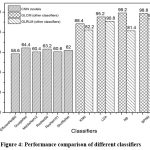

Quality grading of the five types of rice grain is performed in MATLAB, with 10% of the rice grain images (or of the extracted features, for the classifiers other than CNNs) used for training. Figures 2 and 3 show, respectively, the training progress plot and the confusion matrix for GoogLeNet, the best-performing of the six CNNs considered in this work. For testing, 100% of the data (500 images covering the five rice varieties) is used to analyze classifier performance. The average classification accuracies of the six CNNs are 58.6% for EfficientNet-b0, 64.4% for GoogLeNet, 60.4% for MobileNetV2, 63.2% for ResNet-50, 60.6% for ResNet-101, and 62.0% for ShuffleNet. With GLCM features, the other classifiers achieve 88.4% (K-NN), 95.2% (LDA), 99.2% (NB), and 98.8% (BPNN). With GLRLM texture features, the corresponding accuracies are 82.2%, 90.8%, 81.4%, and 93.4%. These results show that grading rice using texture features outperforms all six CNN classifiers considered in this work. They also show that GLCM texture features are more suitable for grading the five types of rice presented here, with the highest average classification accuracy of 99.2% (obtained with NB).
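Because every classifier is tested on the same 500 images, each reported percentage corresponds to a whole number of correctly graded images (0.2% per image). The short sketch below converts the accuracies stated above into image counts; it adds no new results, only arithmetic on the reported figures.

```python
# Average accuracies (percent) as reported in this section.
REPORTED = {
    "EfficientNet-b0": 58.6, "GoogLeNet": 64.4, "MobileNetV2": 60.4,
    "ResNet-50": 63.2, "ResNet-101": 60.6, "ShuffleNet": 62.0,
    "K-NN (GLCM)": 88.4, "LDA (GLCM)": 95.2, "NB (GLCM)": 99.2, "BPNN (GLCM)": 98.8,
    "K-NN (GLRLM)": 82.2, "LDA (GLRLM)": 90.8, "NB (GLRLM)": 81.4, "BPNN (GLRLM)": 93.4,
}

def correct_images(accuracy_percent, n_test=500):
    """Number of correctly graded images implied by an accuracy over n_test images."""
    return round(accuracy_percent * n_test / 100)

counts = {name: correct_images(acc) for name, acc in REPORTED.items()}
```

For example, GoogLeNet's 64.4% corresponds to 322 of 500 images, while NB with GLCM features grades 496 of 500 correctly.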

Figure 2: Training progress graph for GoogLeNet

Figure 3: Confusion matrix on test data for GoogLeNet

Table 3 shows the performance of each classifier in grading the rice, i.e., how well each classifier recognizes the different grades. As stated above, 500 images (100 per grade) are used for testing, and Table 3 gives the number of images out of 100 in each grade that are predicted correctly. Figure 4 shows the overall comparison between the CNN models and the other classifiers.

Table 3: Performance of each classifier

Figure 4: Performance comparison of different classifiers

Discussion

Quality grading of five types of rice grain based on bulk images is carried out using different classifiers in MATLAB, and their performance is compared. Bulk grain images are used instead of single-kernel images to simplify the classification process; otherwise, grading would also involve various additional image preprocessing steps. Classification and grading of grain with CNNs does not require tedious steps such as feature extraction and selection, because these are handled by the convolution and max pooling layers. However, CNNs take longer to train because of their large number of layers. This study compares the performance of CNNs with that of the conventional approach to image grading and classification based on engineered features, using the two most widely used texture feature extraction techniques, GLCM and GLRLM. The literature also indicates that most pattern recognition tasks are application specific. The results show that the CNNs considered in this work are less suitable for this grading task. This study suggests that classifying and grading rice grain with engineered features (texture features in this case) yields better results, likely because human expertise guides the feature extraction process. GLCM texture features also give better results than GLRLM texture features. Finally, the BPNN classifier gives the most consistent results across both texture feature sets (98.8% with GLCM and 93.4% with GLRLM).

Conclusion

Quality grading of five types of rice grain is carried out using ten different classifiers. This study compares the performance of six pre-trained convolutional neural networks with that of conventional classifiers using texture features. Among the six CNNs, GoogLeNet gives the highest accuracy, 64.40%. However, rice grading using engineered texture features yields better results than the CNNs. Grading using GLCM features achieves a higher accuracy (99.20%) than grading using GLRLM features (93.40%). The results also suggest that the BPNN classifier performs the task more consistently than the other conventional classifiers considered in this work. Thus, this study shows that grading rice grain using engineered features outperforms CNN-based classifiers, whose feature extraction is built into the convolution layers.

Acknowledgement

The successful completion of this study is due to the cooperation of respected instructors and colleagues, who offered priceless advice at every stage. For his invaluable support and encouragement during this entire endeavor, I am extremely grateful to Mr. Ksh Robert Singh, Assistant Professor, Department of Electrical Engineering, Mizoram University.

Funding Sources

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Conflict of Interest

The authors do not have any conflict of interest.

Data Availability Statement

This statement does not apply to this article.

Ethics Statement

This research did not involve human participants, animal subjects, or any material that requires ethical approval.

Informed Consent Statement

This study did not involve human participants, and therefore, informed consent was not required.

Authors’ Contribution

1st Author (Nilutpal Phukon)

Carried out the work using different pre-trained convolutional neural networks.

2nd Author (Ksh. Robert Singh)

Performed texture feature extraction (using GLCM and GLRLM) and organized the whole manuscript.

3rd Author (Sonamani Singh T.)

Carried out the literature survey of this work.

4th and 5th Authors (Subir Datta and Subhasish Deb)

Contributed to collecting the five different grades of rice and to the image acquisition task.

6th and 7th Authors (Robinchandra Singh U. and Ghaneshwori Thingbaijam)

Carried out the classification and grading of rice grain using different conventional classifiers other than CNNs.

References

- Kiratiratanapruk K., Sinthupinyo W. Color and texture for corn seed classification by machine vision. Intelligent Signal Processing and Communications Systems: International Symposium; 7-9 December 2011; Chiang Mai, Thailand.

- Ronge R. V., Sardeshmukh M. M. Comparative analysis of Indian wheat seed classification. Advances in Computing, Communications and Informatics: International Conference; 24-27 September 2014; Delhi, India.

- Yi X., Eramian M., Wang R., Neufeld E. Identification of Morphologically Similar Seeds Using Multi-kernel Learning. Computer and Robot Vision: Canadian Conference; 6-9 May 2014; Montreal, QC, Canada.

- Cinar I., Koklu M. Classification of Rice Varieties Using Artificial Intelligence Methods. Int Jour of Intell Syst Appl Engg. 2019; 7(3): 188-194.

- Mahajan S., Kaur S. Quality Analysis of Indian Basmati Rice Grains using Top-Hat Transformation. Int Jour of Comp App. 2014; 94(15): 42-48.

- Sharma D., Sawant S. D. Grain quality detection by using image processing for public distribution. Intelligent Computing and Control Systems: International Conference; 15-16 June 2017; Madurai, India.

- Akash T., Rahul V., Prakash G., Rashmi A. Grading and Quality analysis of rice and grains using digital image processing. Int Jour of Engg Tech and Manag Scs. 2022; 6(4): 280-284.

- Anami B. S., Savakar D. G. Effect of Foreign Bodies on Recognition and Classification of Bulk Food Grains Image Samples. Jour of Appl Comp Sc. 2009; 6(3): 77-83.

- Nagoda N., Lochandaka R. Rice Sample Segmentation and Classification Using Image Processing and Support Vector Machine. Industrial and Information Systems: International Conference; 1-2 December 2018; Rupnagar, India.

- Wah T. N., San P. E., Hlaing T. Analysis on Feature Extraction and Classification of Rice Kernels for Myanmar Rice Using Image Processing Techniques. Int Jour of Sci and Res Publ. 2018; 8(8): 603-606.

- Kaur H., Singh B. Classification and grading rice using multi-Class SVM. Int Jour of Sci and Res Publ. 2013; 3(4): 1-5.

- Ibrahim S., Zulkifli N. A., Sabri N., Shari A. A., Mohd Noordin M. R. Rice grain classification using multi-class support vector machine (SVM). IAES Int Jour of Arti Intel. 2019; 8(3): 215-220.

- Silva C. S., Sonnadara U. Classification of Rice Grains Using Neural Networks. Agriculture and food science: Technical Sessions; 29 March 2013; Kelaniya, Sri Lanka.

- Arora B., Bhagat N., Saritha L. R., Arcot S. Rice Grain Classification using Image Processing & Machine Learning Techniques. Inventive Computation Technologies: International Conference; 26-28 February 2020; Coimbatore, India.

- Nayana K. B., Geetha C. K. Quality Testing of Food Grains Using Image Processing and Neural Network. Int Jour for Res in Appl Sc and Engg Tech. 2022; 10(7): 1163-1175.

- Visen N. S., Paliwal J., Jayas D. S., White N. D. G. Image analysis of bulk grain samples using neural networks. Canad Biosys Engg. 2004; 46: 7.11-7.15.

- Keya M., Majumdar B., Islam Md. S. A Robust Deep Learning Segmentation and Identification Approach of Different Bangladeshi Plant Seeds Using CNN. Computing, Communication and Networking Technologies: International Conference; 1-3 July 2020; Kharagpur, India.

- Prakash N., Rajakumar R., Madhuri N. L., Jyothi M., Bai A. Pa., Manjunath M., Gowthami K. Image Classification for Rice varieties using Deep Learning Models. YMER Jour. 2022; 21(6): 261-275.

- Hiremath S. K., Suresh S., Kale S., Ranjana R., Suma K. V., Nethra N. Seed Segregation using Deep Learning. Grace Hopper Celebration India: International Conference; 6-8 November 2019; Bangalore, India.

- Gilanie G., Nasir N., Bajwa U., Ullah H. RiceNet: convolutional neural networks-based model to classify Pakistani grown rice seed types. Multim Syst. 2021; 27: 867-875.

- Gulzar Y., Hamid Y., Soomro A. B., Alwan A. A., Journaux L. A Convolution Neural Network-Based Seed Classification System. MDPI Jour of Sym. 2020; 12(12): 1-18.

- Hong Son N., Thai-Nghe N. Deep Learning for Rice Quality Classification. Advanced Computing and Applications: International Conference; 26-28 November 2019; Nha Trang, Vietnam.

- Koklu M., Cinar I., Taspinar Y. S. Classification of rice varieties with deep learning methods. Comp and Electr in Agri. 2021; : 1-8.