Introduction

Overview

With a contribution of approximately 18% to the GDP, agriculture has established itself as one of the most prominent sectors of the nation. Significant challenges such as unpredictable climatic conditions, continuously falling groundwater levels and the intrusion of stray animals into farmland are making farming difficult for farmers around the globe. Factors such as unpredictable weather and rainfall shortages may be termed uncontrollable, but problems such as animal intrusion can be controlled with conventional methods as well as technological solutions1. Around 30,000 instances of crop damage due to animal intrusion are reported in India every year, resulting in economic loss as well as loss of life due to human-animal conflict. Conventional methods such as fences and trenches are still commonly used to check animal raids, but they have not only proved ineffective at keeping animals out of agricultural land but also injure animals. Therefore, this paper proposes a deep learning based object detection method that detects stray animals in time and instantly alerts the farmer about the intrusion, so that he can take timely and appropriate action. If a dangerous animal has entered the fields, the farmer can take safety precautions, which can help save farmers' lives as well as limit damage to crops.

Conventional Methods

Farmers have long used conventional practices such as trenches and fences to check animal intrusion into farmland, but such practices have proved ineffective for this purpose: animals can enter the fields by jumping across them or tunnelling under them. Other traditional methods, such as scarecrows, may not succeed in scaring off all types of animals and birds2. The major weakness of such methods is that they cannot give farmers real-time information. If an animal breaches the fields, the farmer may learn about it only hours or even days later.

IoT Based Solution

Nowadays, IoT networks have become an inseparable part of various fields such as defence and medicine. The ability of IoT devices to monitor their surroundings, gather information and transmit it to the appropriate destination has made IoT one of the most widely used technologies today3. IoT networks are reliable and efficient, and they support the transfer of information in real-time environments where high speed is required4.

|

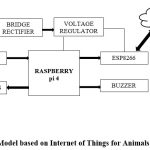

Figure 1: Model based on Internet of Things for Animals Detection5 |

An IoT network consists of a large number of small sensors and is widely used for monitoring and real-time data transfer. As depicted in Fig 1, a 12 V power supply is required along with a camera to monitor the surroundings and collect information about the required parameters from the environment. The gathered information is stored on an IoT cloud, which serves as the storage layer from which the data are retrieved for further processing. The entire process runs on an Arduino- or Raspberry Pi-based setup. IoT networks can help farmers protect their crops from stray animals: a model trained to detect the entry of animals into the field identifies the animal in the frame, and the farmer is alerted by transmitting information about the potential threat6,7.

Image and Video Processing Technique for Detection of Animal

Methods based on image processing offer an effective and efficient strategy for detecting animals entering farms. Because the data are processed in real time, such methods need no additional storage8,9. The detection model, trained specifically to detect and identify animals entering the fields, processes the video frames captured by cameras installed on the agricultural land10. One of the most effective and innovative approaches is You Only Look Once (YOLO), an object detection technique based on a Deep Neural Network (DNN) that detects the objects present in an image by drawing a bounding box around each of them. YOLOv5 (You Only Look Once version 5) is the most popular version of YOLO for the detection and classification of objects11. In such a system, the IoT network is responsible for detecting the object, whereas the prediction model outputs the predicted object class together with a confidence score for the detection. The accuracy of these predictions depends heavily on network training, which is the most crucial part of a detection model12.
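YOLO-family detectors typically output many overlapping candidate boxes for the same animal, which are reduced to one box per object by confidence filtering and non-maximum suppression (NMS). The following minimal Python sketch shows that standard post-processing step under simplifying assumptions: boxes are pixel corners (x_min, y_min, x_max, y_max), the NMS is class-agnostic, and the thresholds are illustrative defaults rather than the values used in this work.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        conf_thres: float = 0.25, iou_thres: float = 0.45) -> List[int]:
    """Return indices of the boxes kept after confidence filtering and greedy NMS."""
    # Drop low-confidence candidates, then visit the rest from the highest score down.
    order = sorted((i for i, s in enumerate(scores) if s >= conf_thres),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) < iou_thres for k in keep):
            keep.append(i)
    return keep
```

For example, two heavily overlapping boxes around the same animal plus one separate box, `non_max_suppression([(10, 10, 110, 110), (12, 12, 112, 112), (300, 300, 380, 360)], [0.9, 0.8, 0.6])`, keep indices 0 and 2: the lower-scoring duplicate is suppressed.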

Moreover, the need for ICT resources such as a stable Internet connection, and for expensive hardware such as a Graphics Processing Unit (GPU), can hinder the implementation of such models: the initial cost of setting up and maintaining such a system can be quite high, which is a significant concern for agriculturists.

Comparison of YOLO with other Object Detection Algorithms

Various object detection algorithms, such as SSD (Single Shot Detector), R-CNN (Region-Based Convolutional Neural Network) and YOLO (You Only Look Once), can detect objects in an image or a video. SSD performs detection by adding extra feature layers of different scales at the end of the base network13. R-CNN applies a CNN to candidate regions of the image to detect an object14. YOLO treats detection as a single regression problem, enabling end-to-end target detection15. For small objects, SSD is considerably less accurate than Faster R-CNN. SSD also needs a large amount of training data, which can be financially exhausting and time-consuming. The precision of this algorithm comes at the expense of time complexity. Despite the advancements of Fast R-CNN over R-CNN, these region-based methods still require numerous passes over a single image, in contrast to YOLO, which processes the whole image in a single pass.
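To make the regression view concrete, the sketch below decodes a single grid-cell prediction into a pixel bounding box, in the spirit of the original YOLO formulation. The parameterization is a simplifying assumption: (tx, ty) locate the box centre within the cell, and (w, h) give the box size as fractions of the image. YOLOv5 itself uses anchor boxes and a different, sigmoid-based parameterization that is not reproduced here.

```python
from typing import Tuple


def decode_grid_prediction(row: int, col: int, tx: float, ty: float, w: float, h: float,
                           grid_size: int, img_w: int, img_h: int) -> Tuple[float, float, float, float]:
    """Map one grid cell's regressed values to a pixel box (x_min, y_min, x_max, y_max).

    Assumed parameterization: (tx, ty) in [0, 1] place the box centre inside cell
    (row, col) of a grid_size x grid_size grid; (w, h) are fractions of the image size.
    """
    cx = (col + tx) / grid_size * img_w  # box centre x in pixels
    cy = (row + ty) / grid_size * img_h  # box centre y in pixels
    bw, bh = w * img_w, h * img_h        # box width and height in pixels
    return (cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2)
```

For a 7 × 7 grid on a 448 × 448 image, a prediction in cell (3, 3) with tx = ty = 0.5 and w = h = 0.25 decodes to the box (168, 168, 280, 280), centred in the image. Because every cell regresses its boxes in one forward pass, no separate region-proposal stage is needed.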

Materials and Methods

Agriculture is one of the most significant contributors to the economies of many countries, especially developing nations. One of the most crucial issues in this field is the intrusion of stray animals into farmland, which results in crop damage and in injuries to both humans and animals due to the resulting conflict. There is therefore an urgent need for a system that protects both animals and farmers from economic and physical harm.

This paper proposes an effective and affordable technological solution for the real-time detection and tracking of animals entering the fields, integrating YOLOv5 with IoT technology to send a timely alert that informs the farmer so that appropriate action can be taken. The proposed methodology is organized into four main stages: pre-processing of the data, conversion to YOLO format, detection with our modified YOLOv5 model, and tracking with a refined DeepSORT algorithm.

The first step is to pre-process the images. Pre-processing comprises steps such as resizing the images to a consistent size and normalizing the pixel values. Augmentation techniques such as rotations, translations and flips are applied to increase the diversity of the dataset. To be used by the model, the data must then be converted into the format expected by YOLO, known as the YOLO format, in which each annotation consists of a class label and normalized bounding box coordinates. Next, modifications were introduced in the architecture of the YOLOv5 model to fine-tune it for detecting a diverse set of animal species. These modifications include varying the distribution of layers and filter sizes, along with adjusting parameters such as the learning rate and weight decay. The refined DeepSORT approach incorporates modifications that handle tracking challenges such as occlusions and the similar appearance of animal species. Two datasets were used for training and testing the model: the Animal-80 dataset for images and animal videos from ImageNet. The Animal-80 dataset comprises images of eighty diverse animal species found in different regions, whereas the ImageNet videos of animals are mainly intended for object detection.
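The conversion to YOLO format and the effect of a flip augmentation on the labels can be sketched as follows. This is an illustrative sketch, assuming the source annotations are pixel corner coordinates; each YOLO label line holds the class index followed by the normalized centre x, centre y, width and height. The class index 5 in the usage example is hypothetical.

```python
from typing import Tuple


def to_yolo_line(class_id: int, box: Tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    YOLO labels store: class x_center y_center width height, each normalized to [0, 1].
    """
    x_min, y_min, x_max, y_max = box
    xc = (x_min + x_max) / 2 / img_w
    yc = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def hflip_yolo_line(line: str) -> str:
    """Update a YOLO label after a horizontal image flip: only x_center changes."""
    cls, xc, yc, w, h = line.split()
    return f"{cls} {1.0 - float(xc):.6f} {yc} {w} {h}"
```

For a 640 × 480 image with a box at (100, 120, 300, 360), `to_yolo_line(5, (100, 120, 300, 360), 640, 480)` yields `5 0.312500 0.500000 0.312500 0.500000`, and after a horizontal flip the centre x becomes 0.687500. Rotations and translations would additionally require recomputing and clipping the boxes, which this sketch does not cover.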

Rapid advances in technology over the last two decades, especially in computer vision, have offered a wide range of solutions to age-old issues. Deep learning models such as YOLO have brought a revolutionary change to computer vision. Furthermore, the advent of IoT has brought significant improvements in real-time information transfer by making it possible to interconnect diverse components. Integrating deep learning models with IoT can therefore offer a promising solution to the problem of crop damage by stray animals.

The system integrates three components: video cameras that capture video in real time; YOLOv5, which processes the captured video to detect and track the animal; and IoT devices that notify the farmer about the intrusion. The detailed procedure for animal detection and tracking is outlined below:

Video Data Capturing

The first step in implementing this method is the strategic positioning of cameras, specifically close to the prospective entry points of animals.

Cameras with night vision should be preferred, as many animals, particularly wild animals, intrude into farms at night.

Cameras should be selected keeping in mind parameters such as the size of the farm, the animal species found in the area and the financial constraints of the farmer. Installing higher-resolution cameras can improve detection accuracy.

Detection of Animal using YOLOv5

YOLO (You Only Look Once) is a pathbreaking object detection algorithm with many versions; its most popular version, known for its detection accuracy and speed, is YOLOv5.

YOLOv5 processes each frame of the video. Once an animal is detected, its movement is tracked continuously until it leaves the camera's field of view.

YOLOv5 processes images very quickly, which makes it well suited to real-time detection. The model is trained on a diverse dataset containing images of various animals likely to intrude into agricultural land. Its detection accuracy can be improved further by fine-tuning it on local data at regular intervals.

The model is capable of continually tracking the animal even if it stops or changes its direction.
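Keeping a consistent ID for each animal across frames is the job of the tracker. The paper uses a refined DeepSORT, whose internals are not detailed here; the sketch below is a much simpler, hypothetical stand-in that greedily matches detections to existing tracks by intersection over union (IoU) and keeps a track alive for a few frames when the animal is briefly not detected. DeepSORT additionally uses a Kalman filter motion model, Hungarian assignment and appearance embeddings, none of which are included here.

```python
from itertools import count
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IoUTracker:
    """Greedy IoU tracker: a simplified stand-in, not DeepSORT."""

    def __init__(self, iou_thres: float = 0.3, max_missed: int = 5):
        self.iou_thres = iou_thres
        self.max_missed = max_missed  # frames a track may go undetected before it is dropped
        self.tracks: Dict[int, Box] = {}
        self.missed: Dict[int, int] = {}
        self._next_id = count(1)

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        """Assign track IDs to this frame's detections; return {track_id: last known box}."""
        unmatched = list(range(len(detections)))
        for tid in list(self.tracks):
            # Pick the unused detection that overlaps this track the most.
            best = max(unmatched, key=lambda j: iou(self.tracks[tid], detections[j]), default=None)
            if best is not None and iou(self.tracks[tid], detections[best]) >= self.iou_thres:
                self.tracks[tid] = detections[best]
                self.missed[tid] = 0
                unmatched.remove(best)
            else:
                # Not seen this frame: keep the last box (e.g. brief occlusion) until too stale.
                self.missed[tid] += 1
                if self.missed[tid] > self.max_missed:
                    del self.tracks[tid], self.missed[tid]
        for j in unmatched:
            # Detections that match no existing track start a new track with a fresh ID.
            tid = next(self._next_id)
            self.tracks[tid] = detections[j]
            self.missed[tid] = 0
        return dict(self.tracks)
```

Because a stopped animal produces nearly the same box in consecutive frames, its IoU with the existing track stays high and it keeps its ID; a real deployment facing occlusions or look-alike animals would need the motion and appearance cues that DeepSORT adds.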

Instant Alert through IoT Integration

When YOLOv5 detects an animal, a signal is transmitted to an IoT device.

The IoT device is responsible for alerting the farmer about the entry of the animal, either by sending an SMS or through an automated call.

The IoT setup requires a stable Internet connection to ensure that the farmer is notified of the intrusion immediately, irrespective of his location.

The alert not only notifies the farmer of the intrusion but also reports the type of animal and its location. This information helps the farmer decide on the appropriate action.
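The alert content described above (type of animal and location) could be assembled as in the following sketch. The field names, the camera identifier and location string, and the SMS wording are hypothetical; the code only formats the message and leaves out the actual SMS or call gateway, which depends on the chosen IoT hardware and service provider.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class IntrusionAlert:
    species: str       # class label predicted by the detector
    confidence: float  # detector confidence, rounded to two decimals
    camera_id: str     # hypothetical ID of the camera that saw the animal
    location: str      # hypothetical human-readable location of that camera
    timestamp: str     # ISO 8601 detection time (UTC by default)


def build_alert(species: str, confidence: float, camera_id: str, location: str,
                when: Optional[datetime] = None) -> IntrusionAlert:
    """Collect what the farmer needs in order to decide on an action."""
    when = when or datetime.now(timezone.utc)
    return IntrusionAlert(species, round(confidence, 2), camera_id, location, when.isoformat())


def sms_text(alert: IntrusionAlert) -> str:
    """Short text suitable for an SMS or a text-to-speech call."""
    return (f"ALERT: {alert.species} detected at {alert.location} "
            f"(camera {alert.camera_id}, confidence {alert.confidence:.0%}) at {alert.timestamp}")


def json_payload(alert: IntrusionAlert) -> str:
    """Machine-readable form, e.g. for forwarding to a cloud or messaging service."""
    return json.dumps(asdict(alert))
```

Keeping a human-readable text and a structured payload separate lets the same detection feed both an SMS to the farmer and a log on the IoT cloud.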

Operational Workflow

Once the system is installed, the cameras continuously feed video data to the detection model.

YOLOv5 processes this video data to detect the presence of stray animals in the fields. Once an animal is detected, the system keeps monitoring its movement until it leaves the camera's range.

When a detection is confirmed, the IoT device sends an alert to the farmer, enabling appropriate and immediate action to prevent crop damage.
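One way to implement the confirmed detection step is to alert only when an animal is detected in several consecutive frames, and to alert once per intrusion rather than once per frame. In the sketch below, `detect` and `notify` are hypothetical callables standing in for the YOLOv5 model and the IoT alert channel, and the confidence threshold and three-frame confirmation window are illustrative assumptions.

```python
from typing import Callable, Iterable, List, Tuple

Detection = Tuple[str, float]  # (species label, confidence)


def monitor(frames: Iterable[object],
            detect: Callable[[object], List[Detection]],
            notify: Callable[[str], None],
            conf_thres: float = 0.5,
            confirm_frames: int = 3) -> int:
    """Run detection on every frame and alert once per confirmed intrusion.

    An intrusion counts as confirmed when an animal is detected above conf_thres in
    confirm_frames consecutive frames, which filters out single-frame false positives.
    Returns the number of alerts sent.
    """
    streak, alerted, alerts = 0, False, 0
    for frame in frames:
        hits = [d for d in detect(frame) if d[1] >= conf_thres]
        if hits:
            streak += 1
            if streak >= confirm_frames and not alerted:
                species = max(hits, key=lambda d: d[1])[0]  # most confident label
                notify(f"{species} detected in the field")
                alerted, alerts = True, alerts + 1
        else:
            # No animal in this frame: reset so the next intrusion triggers a new alert.
            streak, alerted = 0, False
    return alerts
```

Because the streak resets on the first frame without a detection, a detector that flickers could trigger repeated alerts for the same animal; a deployment might instead reset only after several empty frames.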

System Maintenance and Upgrades

The cameras and IoT devices require regular maintenance to keep working efficiently in the long term.

The detection model needs to be retrained periodically, especially when new animal species are detected in the fields or when the accuracy of the system starts declining.
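The retraining criteria above can be turned into a simple rule, as in this hypothetical sketch: retraining is flagged when a species outside the model's known classes is observed in the field, or when the fraction of recent alerts that the farmer confirms as correct falls below a threshold over a sliding window. The window length, the 0.9 threshold and the feedback mechanism are assumptions, not part of the described system.

```python
from collections import deque
from typing import Deque, Iterable, Set


class RetrainMonitor:
    """Hypothetical policy for deciding when the detector should be retrained."""

    def __init__(self, known_classes: Iterable[str], window: int = 100,
                 min_precision: float = 0.9):
        self.known: Set[str] = set(known_classes)
        # Sliding window of farmer feedback: True = the alert was a real intrusion.
        self.feedback: Deque[bool] = deque(maxlen=window)
        self.min_precision = min_precision
        self.unknown_species: Set[str] = set()

    def record_feedback(self, alert_was_correct: bool) -> None:
        self.feedback.append(alert_was_correct)

    def record_sighting(self, species: str) -> None:
        """Log a species observed in the field (e.g. reported by the farmer)."""
        if species not in self.known:
            self.unknown_species.add(species)

    def alert_precision(self) -> float:
        """Fraction of recent alerts that were correct (1.0 when there is no feedback)."""
        return sum(self.feedback) / len(self.feedback) if self.feedback else 1.0

    def needs_retraining(self) -> bool:
        window_full = len(self.feedback) == self.feedback.maxlen
        low_precision = window_full and self.alert_precision() < self.min_precision
        return bool(self.unknown_species) or low_precision
```

Waiting for a full window before judging precision avoids triggering retraining on the first few noisy feedback entries.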

IoT devices also need to be upgraded at regular intervals to ensure that they remain compatible with the network configuration and with the devices at the receiving end.

Results

The class-wise performance of the proposed model is given in Table 1, which details detection metrics for animals such as elephants, bears, goats and tigers. For every listed class, precision, recall and mAP50 are at least 0.970, and mAP50-95 is at least 0.965. In object detection terms, the high precision (0.970 to 0.985) means that the model rarely makes incorrect detections, minimizing false alarms, while the high recall (0.975 to 0.990) means that very few animals present in the images or videos are missed.

Table 1: Class-wise performance of the proposed model

| Class | Images | Instances | Precision | Recall | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|
| Bear | 6003 | 42 | 0.980 | 0.985 | 0.975 | 0.970 |
| Brown bear | 6003 | 44 | 0.975 | 0.975 | 0.980 | 0.965 |
| Bull | 6003 | 22 | 0.970 | 0.985 | 0.975 | 0.965 |
| Cheetah | 6003 | 17 | 0.970 | 0.990 | 0.985 | 0.975 |
| Deer | 6003 | 235 | 0.975 | 0.980 | 0.980 | 0.970 |
| Elephant | 6003 | 107 | 0.985 | 0.990 | 0.990 | 0.980 |
| Goat | 6003 | 64 | 0.980 | 0.985 | 0.985 | 0.975 |
| Tiger | 6003 | 36 | 0.980 | 0.985 | 0.980 | 0.970 |
| Turtle | 6003 | 22 | 0.980 | 0.985 | 0.980 | 0.970 |
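The metrics in Table 1 follow the usual object detection definitions: a detection counts as a true positive (TP) when it matches a ground-truth box of the same class, typically at an IoU of at least 0.5 (hence mAP50), while mAP50-95 averages the average precision over IoU thresholds from 0.5 to 0.95 in steps of 0.05. The short sketch below computes precision, recall and their harmonic mean (F1) from counts. The counts in the test are made up for illustration; the F1 helper can be applied directly to the precision and recall values in the table.

```python
from typing import Tuple


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision = TP/(TP+FP), recall = TP/(TP+FN), plus their F1 score."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)


# Elephant row of Table 1: precision 0.985, recall 0.990
print(round(f1_score(0.985, 0.990), 4))  # 0.9875
```

A single F1 value per class can make it easier to compare classes whose precision and recall move in opposite directions.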

Discussion

The results indicate that the modified YOLOv5 model detects various animal species accurately across different scenarios and environmental conditions. Notably, the model captures even small animals and animals at a distance, which points to its robustness and high sensitivity. The refined DeepSORT tracker also performs well: the unique ID assigned to each animal remains consistent across frames, illustrating the stability of the tracking algorithm, which is crucial when animal movement must be monitored over time. The alert system integrated with the detection model brings practical value to the entire pipeline: by sending real-time alerts when potential threats are detected, it enables prompt action, potentially preventing crop damage and improving farm safety. Overall, these results indicate that combining the adapted YOLOv5, the refined DeepSORT and the real-time alert system provides a robust and efficient solution for animal detection and tracking on farms.

However, in developing nations, limited access to facilities such as fast Internet and hardware may affect the practical implementation of this model in remote and rural areas. Low Power Wide Area Network (LPWAN) technologies such as LoRaWAN and Sigfox, which are suited to long-range communication and require minimal bandwidth, may be used to address the connectivity issue in such areas. To address the concern of hardware availability, cost-effective alternatives such as low-cost, open-source hardware for sensors and cameras can be explored in future. Another significant challenge is the diversity of animal species across regions, which requires rigorous training of the model. This could be addressed by adapting the YOLOv5 model, for example by fine-tuning it with region-specific training data to improve accuracy. Moreover, multi-modal data (e.g., sound detection or thermal cameras) can be incorporated for more accurate detection of species in challenging environments.
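To illustrate why low-bandwidth LPWAN links can suffice for alerts, the sketch below packs an intrusion alert into 8 bytes, within the 12-byte uplink payload limit of Sigfox. The species codes, field layout and one-byte confidence quantization are hypothetical choices; LoRaWAN payload limits differ by region and data rate and are not modelled here.

```python
import struct
from typing import Tuple

SIGFOX_MAX_UPLINK_BYTES = 12  # maximum Sigfox uplink payload size

# Hypothetical species codes; a real deployment would reuse the detector's class indices.
SPECIES_CODES = {"bear": 0, "bull": 1, "deer": 2, "elephant": 3, "goat": 4, "tiger": 5}
CODE_TO_SPECIES = {code: name for name, code in SPECIES_CODES.items()}

# Big-endian, no padding: species (1 byte), confidence (1 byte), camera id (2), Unix time (4).
ALERT_FORMAT = ">BBHI"


def pack_alert(species: str, confidence: float, camera_id: int, unix_time: int) -> bytes:
    """Encode an alert as a compact binary payload."""
    conf_byte = max(0, min(255, round(confidence * 255)))  # quantize confidence to 0..255
    payload = struct.pack(ALERT_FORMAT, SPECIES_CODES[species], conf_byte, camera_id, unix_time)
    if len(payload) > SIGFOX_MAX_UPLINK_BYTES:
        raise ValueError("payload exceeds the uplink limit")
    return payload


def unpack_alert(payload: bytes) -> Tuple[str, float, int, int]:
    """Decode the payload on the receiving side."""
    code, conf_byte, camera_id, unix_time = struct.unpack(ALERT_FORMAT, payload)
    return CODE_TO_SPECIES[code], conf_byte / 255, camera_id, unix_time
```

Quantizing the confidence to one byte loses at most about 0.002 in precision, which is negligible for deciding whether to act on an alert.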

Table 2 compares the present work with existing models that use concepts such as the Internet of Things (IoT) and deep learning. In terms of reported accuracy, the current model performs better than these models, although the works address different tasks and datasets, so the figures are not strictly comparable.

Table 2: Comparison of the present work with existing models

| Title | Year | Objective | Accuracy |
|---|---|---|---|
| Application of IoT and machine learning in crop protection against animal intrusion5 | 2021 | To check the destruction of crops by animal intrusion using two emerging fields of information technology, the Internet of Things and machine learning. | 80% |
| BiLSTM-based individual cattle identification for automated precision livestock farming16 | 2020 | The authors developed a deep learning based model for monitoring cattle in livestock farming. | 94.7% |
| Present work | 2023 | Animal detection and tracking in agricultural settings, addressed by combining machine learning techniques with an alert system. | 96% |

Conclusion

Rapid technological advancements have led to the development of innovative solutions in agriculture. One major challenge persistently faced by farmers is crop damage, and the resulting economic loss, caused by animal intrusion into agricultural land. The present work demonstrates the significant potential of machine learning algorithms, particularly the enhanced YOLOv5 and the refined DeepSORT, for animal detection and tracking in agricultural domains. This work is an attempt to design and develop a seamlessly integrated solution that is both robust and manufacturable. The main aims of the system are accurate identification of intruding animals in the video captured by the cameras, continuous tracking of their movements, and timely alerts that inform the farmer of the intrusion. The proposed model can help farmers respond promptly with appropriate action. Trained on a diverse array of image and video datasets, it has shown high accuracy when tested on diverse animal species, with an overall accuracy of 96%. This study shows how the integrated alert system can warn farmers about animal presence in real time, helping to safeguard both farmers and their crops. In brief, an effective, pathbreaking and comprehensive solution to animal intrusion has been proposed by integrating YOLOv5 for detection with IoT for alerting. Future enhancements could include increasing the variety of species the model can detect and refining the alert system for faster and more efficient notifications. The research reaffirms the transformative role that advanced technologies can play in agriculture, paving the way for smarter and safer farming practices.

Future work could also focus on improving the robustness of the models to conditions such as low light, occlusion or varying weather, to ensure accurate animal tracking irrespective of the environmental context. The model may also be deployed on edge devices and adapted for other commercial purposes, such as forest management and zoo management. Since a newer version of YOLO, YOLOv8, has been introduced, the model could be reimplemented using YOLOv8 in future.

Acknowledgement

The author would like to thank Dr Deepam Goyal, Chitkara University for constant guidance and support.

Funding Sources

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Conflict of Interest

The author does not have any conflict of interest.

Data Availability Statement

The datasets used throughout the research study are described in the manuscript.

Ethics Statement

This research did not involve human participants, animal subjects, or any material that requires ethical approval.

Author Contributions

The sole author was responsible for the conceptualization, methodology, data collection, analysis, writing, and final approval of the manuscript.

References

- Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: Unified, real-time object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016:779-788.

- Lopez B, Martinez F. Traditional agricultural practices: An assessment of effectiveness. Agric Hist Rev. 2018;66(1):45-58.

- Smith C. Internet of Things in modern agriculture: Opportunities and challenges. Agric Inform. 2020;7(2):110-123.

- Chitra K. Animals detection system in the farm area using IoT. In: 2023 International Conference on Computer Communication and Informatics (ICCCI); 2023:1-6. doi:10.1109/ICCCI56745.2023.10128557.

- Balakrishna K, Mohammed F, Ullas CR, Hema CM, Sonakshi SK. Application of IoT and machine learning in crop protection against animal intrusion. Glob Transitions Proc. 2021;2(2):169-174. doi:10.1016/j.gltp.2021.08.061.

- Farooq MS, Riaz S, Abid A, Abid K, Naeem MA. New technologies for Smart Farming 4.0: Research challenges and opportunities. 2019;7.

- Bhuvaneshwari C, Manjunathan A. Advanced gesture recognition system using long-term recurrent convolution network. Mater Today Proc. 2020;21:731-733.

- Wild TA, van Schalkwyk L, Viljoen P, et al. A multi-species evaluation of digital wildlife monitoring using the Sigfox IoT network. Anim Biotelemetry. 2023;11(1):1-17. doi:10.1186/s40317-023-00326-1.

- Surya T, Chitra Selvi S, Selvaperumal S. The IoT-based real-time image processing for animal recognition and classification using deep convolutional neural network (DCNN). Microprocess Microsyst. 2022;95:104693. doi:10.1016/j.micpro.2022.104693.

- Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: Unified, real-time object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016:779-788.

- Dai J, Li Y, He K, Sun J. R-FCN: Object detection via region-based fully convolutional networks. Adv Neural Inf Process Syst. 2016.

- Liu W, Wang Z, Liu X. A survey of deep neural network architectures and their applications. Neurocomputing. 2017;234:11-26. doi:10.1016/J.NEUCOM.2016.12.0

- Ren S, He K, Girshick R, Sun J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv Neural Inf Process Syst. 2015;28:91-99.

- Ding S, Zhao K. Research on daily objects detection based on deep neural network. IOP Conf Ser: Mater Sci Eng. 2018;322(6):062024. doi:10.1088/1757-899X/322/6/062024.

- Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: Unified, real-time object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016:779-788.

- Qiao Y, Su D, Kong H, Sukkarieh S, Lomax S, Clark C. BiLSTM-based individual cattle identification for automated precision livestock farming. In: Proceedings of the IEEE 16th International Conference on Automation Science and Engineering (CASE). Hong Kong, China; 2020:967-972. doi:10.1109/CASE48305.2020.9217026.