Introduction

Convolutional Neural Networks (CNNs) have become a valuable tool for processing visual information and are applied to real-world tasks such as object detection, classification, and segmentation. Learning features directly from images is useful because most such applications depend on the spatial structure of the scene. CNNs learn local features and spatial information effectively, which is essential for applications such as pest detection. However, with millions of parameters, training CNNs from scratch poses challenges such as overfitting and long training times that sometimes require high-performance hardware such as TPUs.1 With advances in AI and computer vision algorithms, deep learning strategies extract the features of similar pest species more effectively than conventional HOG and scale-invariant descriptors, which capture only shallow representations.2 Many machine learning and deep learning based approaches3 are available for pest detection, and one such approach is transfer learning.4

Many practical applications in fields such as agriculture5,6,7 and medical imaging8 do not have access to large labelled datasets or to the computing power needed to train deep networks from scratch. This is especially pronounced in resource-constrained environments, such as small businesses or the agricultural sector, where implementing traditional CNNs becomes impractical.

Transfer learning is therefore a good solution to these problems, because it allows a pre-trained model, such as one trained on ImageNet, to be adapted to a new task using a smaller dataset. Retaining the early layers, which extract general features, and fine-tuning9 the later layers for the specific application improves performance while requiring less data and fewer computing resources.10 A recent article11 applied transfer learning with optimal fine-tuning to tomato disease classification and achieved significant results.

Transfer learning is implemented here using Google’s EfficientNet architecture, which uses a compound scaling approach to scale model depth, width, and input resolution simultaneously.12 The largest member of the original family, EfficientNetB7, achieved state-of-the-art ImageNet accuracy when it was published while being considerably smaller and faster than comparably accurate CNNs. After a systematic study of model scaling, the authors of EfficientNet concluded that balancing network depth, width, and resolution is essential for good performance. They introduced a simple yet effective compound scaling method that scales all three dimensions uniformly using a fixed set of scaling coefficients, and demonstrated it by scaling up existing CNN architectures such as MobileNets and ResNets for image classification. Going one step further, they used neural architecture search to design a new baseline network, which was then scaled to produce the EfficientNet family of models. The scaled EfficientNet models perform significantly better than other CNN-based models on the ImageNet classification task, showing the importance of balancing network dimensions for building accurate and efficient ConvNets, and the authors note that the success of their scaling strategy depends heavily on the baseline network. The architecture also transfers well to new tasks, which is required when working with the smaller numbers of images captured in agricultural fields.
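The compound scaling rule can be made concrete with a short calculation. In the EfficientNet paper,12 a compound coefficient φ sets the depth multiplier to α^φ, the width multiplier to β^φ, and the resolution multiplier to γ^φ, under the constraint α·β²·γ² ≈ 2, so FLOPs grow by roughly 2^φ. The sketch below uses the coefficients reported there (α = 1.2, β = 1.1, γ = 1.15); for φ = 1 the FLOPs factor is 1.2 × 1.1² × 1.15² ≈ 1.92. It illustrates the rule only: the released B1 to B7 models use their own tabulated multipliers, so these numbers do not reproduce EfficientNetB7 exactly.

```python
# Coefficients reported in the EfficientNet paper, found by a small grid
# search on the B0 baseline under the constraint alpha * beta**2 * gamma**2 ~ 2.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi: float) -> tuple:
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_factor(phi: float) -> float:
    """Approximate FLOPs growth: FLOPs scale with depth * width**2 * resolution**2."""
    d, w, r = compound_scale(phi)
    return d * w ** 2 * r ** 2


if __name__ == "__main__":
    base_resolution = 224  # EfficientNet-B0 input size
    for phi in range(4):
        d, w, r = compound_scale(phi)
        print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
              f"resolution {round(base_resolution * r)} px, "
              f"FLOPs x{flops_factor(phi):.2f}")
```

Width enters squared because doubling the channel count roughly quadruples the cost of each convolution, and resolution enters squared because the number of spatial positions grows with both height and width.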

This balanced scaling approach lets EfficientNet capture intricate patterns in images without excessive resource use, making it suitable for both large-scale and resource-constrained tasks.13

With applications ranging from agriculture to health,14,15 EfficientNetB7 and the transfer learning approach16 have had a notable impact across several sectors. In agriculture, for example, their main uses have been crop disease detection and pest classification, where labelled datasets are often small or highly specialized.17 These models enable precise yet efficient pest detection, a long-standing challenge in precision agriculture.

This work explores the feasibility of using EfficientNetB7 for real-time agricultural pest detection, focusing on its computational efficiency and accuracy. The proposed system combines EfficientNetB7 with an STM32 microcontroller to identify pests in a cost-effective and scalable way, an important step toward bringing modern machine learning techniques to agricultural automation.

Deep CNNs are now preferred over more conventional methods for image-based plant disease detection because deep learning has made significant strides in computer vision. Using a dataset of 54,306 images covering 26 diseases and 14 crop species from the PlantVillage project, Mohanty et al. trained a deep network that achieved high accuracy on a held-out test set. This suggests that agricultural diseases could be diagnosed with a smartphone anywhere in the world: although training requires considerable processing power, classification can be performed easily on a smartphone, which makes such an application suitable for mass use. The authors also noted limitations of their approach, such as the lack of validation in real field environments.

Ebrahimi et al. performed SVM-based pest identification and reduced the error rate by using color intensity as a feature.18 Kusrini et al. found that incorporating data augmentation significantly improved the accuracy of pest classification in mango farms.19

Another work applied transfer learning with the EfficientNet architecture, together with Grad-CAM++ visualization,20 to identify nutrient deficiencies in plants from images, achieving an accuracy of 98.65%.

In another study, the EfficientNet B7 architecture was selected because it outperformed other state-of-the-art deep learning models. To increase classification accuracy, that framework investigated the impact of six optimization algorithms: SGD, RMSProp, Adagrad, Adam, Adadelta, and Nadam. Using a softmax classifier and the Nesterov-accelerated adaptive moment estimation (Nadam) optimizer,21 the framework produced the best results, with an average F1-score of 98%, average recall of 97.3%, average precision of 99.3%, and test accuracy of 99%.

The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) serves as a crucial benchmark for visual recognition in computer vision. It draws on the ImageNet database, which contains over 14 million labelled images spanning more than 20,000 categories (the challenge itself uses a 1,000-category subset), and has driven progress in deep learning and image classification. The methods and results of the competing algorithms show a transition from conventional machine learning approaches to deep convolutional neural networks (CNNs), which brought a significant performance improvement in image-based applications. Many research works emphasize the importance of both data quality and quantity in model training and show that deeper networks, such as AlexNet, can achieve superior accuracy. Overall, the ImageNet challenge has stimulated research and innovation in visual recognition, resulting in significant advances across diverse applications.

Based on these studies and surveys, the methods and techniques in this work were selected to perform pest classification with a limited number of images in a resource-constrained environment.

Materials and Methods

Hardware Requirements

OV2640 camera: The camera module uses a CMOS image sensor. Because the model should be validated on low-resolution images, a basic 2-megapixel camera (1600 × 1200) is used. The camera uses a serial interface, and the supported output formats include YUV, JPEG, and RGB, at frame rates of up to 30 fps.

STM32 Nucleo-L476RG microcontroller: The board is built around an ARM Cortex-M4 core running at 80 MHz, with 1 MB of flash memory and a limited 128 KB of SRAM. It operates at a low voltage of 3.3 V and provides interfaces such as USART, SPI, and I2C. Its I/O ports and low-power modes make it well suited for use as an edge device in real field environments. Models developed with the TensorFlow and Keras frameworks can be deployed on this controller after conversion to the TFLite and TFLite Micro formats. STM32CubeIDE is used to develop and compile the firmware.
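Because the STM32L476RG offers 1 MB of flash and 128 KB of SRAM, a quick size estimate is worth making before conversion: the weights occupy roughly (number of parameters × bytes per weight) in flash, and the activations needed during inference must fit in SRAM. The helper below is a hypothetical back-of-the-envelope check, not part of any ST or TensorFlow tool; peak activation memory depends on the architecture and input resolution and has to be estimated separately.

```python
# Hypothetical budget check for an STM32L476RG-class target.
FLASH_BYTES = 1024 * 1024  # 1 MB on-chip flash (weights + firmware)
SRAM_BYTES = 128 * 1024    # 128 KB SRAM (activations / working buffers)

BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def weight_bytes(num_params: int, dtype: str = "int8") -> int:
    """Approximate storage for the weights alone (ignores model metadata)."""
    return num_params * BYTES_PER_WEIGHT[dtype]


def fits_on_target(num_params: int, peak_activation_bytes: int,
                   dtype: str = "int8", firmware_bytes: int = 0) -> bool:
    """True if weights + firmware fit in flash and peak activations fit in SRAM."""
    flash_ok = weight_bytes(num_params, dtype) + firmware_bytes <= FLASH_BYTES
    sram_ok = peak_activation_bytes <= SRAM_BYTES
    return flash_ok and sram_ok


if __name__ == "__main__":
    # Hypothetical 250k-parameter network with 60 KB of peak activations.
    print(fits_on_target(250_000, 60 * 1024))                   # int8 weights
    print(fits_on_target(250_000, 60 * 1024, dtype="float32"))  # float32 weights
```

For example, a 250,000-parameter network stored as int8 needs about 250 KB of flash for its weights, whereas the same network in float32 needs about 1 MB, leaving almost no room for firmware.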

Dataset

The Kaggle platform hosts many publicly available pest image datasets. IP102 is a publicly available image dataset, widely used for agricultural classification applications, with 102 pest classes and about 75,000 images. It is class-imbalanced: the number of images differs from class to class. This dataset is used in this study because its images vary in resolution, brightness, and intensity and include visually similar pest species. This work focuses on only 7 pest classes of the IP102 dataset: Aphids, Armyworm, Beetle, Mites, Sawfly, Stemborer, and Stemfly.

Implementation

CNN-based algorithms, and EfficientNet in particular, are frequently highlighted in literature surveys because of their strong performance on benchmark datasets, achieving high accuracy with reduced computational demands. EfficientNet’s compound scaling approach allows a balanced adjustment of depth, width, and resolution, making it adaptable to different resource constraints, which is particularly advantageous for embedded systems such as STM32 microcontrollers. Furthermore, the architecture is well suited to transfer learning, enabling researchers to use pre-trained models to improve results on smaller, specialized datasets. These characteristics make EfficientNet an attractive option for applications such as pest detection, where both efficiency and accuracy are vital. In this work, EfficientNetB7, whose depth, width, and resolution are set by compound scaling to balance performance and computational efficiency, is applied to pest classification. Transfer learning is used in a feature-extraction setting: the early layers of EfficientNet trained on ImageNet are preserved, and the final classification layer is retrained for pest classification. The later layers are fine-tuned to adapt to the pest dataset while the initial layers keep their general feature extraction capability. The dataset used for pest classification was obtained from Kaggle. It includes 10,000 labelled images of pests from various classes, such as aphids, whiteflies, and caterpillars. The images are distributed as follows: 70% (7,000 images) for training, 20% (2,000 images) for validation, and 10% (1,000 images) for testing. All images are standardized to JPEG format and resized to 224 × 224 pixels to provide a uniform input size for EfficientNetB7.
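The 70/20/10 split described above can be made reproducible with a seeded shuffle, so that each image lands in the same subset on every run. The sketch below works on a list of file paths; the function name, seed, and file names are illustrative, and it does not stratify by class, which may matter for a class-imbalanced source such as IP102.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.2, seed=42):
    """Shuffle a copy of `items` and split it into train/validation/test lists.

    The test set receives whatever remains after the train and validation
    fractions are taken (10% with the default fractions).
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG: reproducible, no global state
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    paths = [f"img_{i:05d}.jpg" for i in range(10_000)]  # hypothetical file names
    train, val, test = split_dataset(paths)
    print(len(train), len(val), len(test))  # 7000 2000 1000
```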
Using regularization and optimization techniques, the model is trained and saved in .h5 format. The trained TensorFlow/Keras model is then converted to TensorFlow Lite format so that it can be imported as an application on the smartphone.
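When a TensorFlow Lite model is prepared for a microcontroller, post-training integer quantization is commonly applied; whether it was used here is not specified. In TensorFlow Lite’s int8 scheme, a real value r is stored as an integer q through the affine mapping r = scale × (q − zero_point). The plain-Python sketch below implements that mapping to show where the rounding error comes from. It mirrors the documented scheme but is not the converter itself, and the scale and zero point are derived from a simple min/max range chosen for illustration.

```python
def affine_params(r_min: float, r_max: float, q_min: int = -128, q_max: int = 127):
    """Scale and zero point mapping [r_min, r_max] onto the int8 range.

    The range is widened to include 0.0 so that zero is exactly representable.
    """
    r_min, r_max = min(r_min, 0.0), max(r_max, 0.0)
    scale = (r_max - r_min) / (q_max - q_min)
    if scale == 0.0:
        return 1.0, 0  # degenerate all-zero range
    zero_point = round(q_min - r_min / scale)
    return scale, int(max(q_min, min(q_max, zero_point)))


def quantize(r: float, scale: float, zero_point: int,
             q_min: int = -128, q_max: int = 127) -> int:
    """Map a real value to int8, saturating at the ends of the range."""
    q = round(r / scale) + zero_point
    return max(q_min, min(q_max, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value: scale * (q - zero_point)."""
    return scale * (q - zero_point)


if __name__ == "__main__":
    s, zp = affine_params(-1.0, 1.0)
    for r in (-1.0, -0.3, 0.0, 0.42, 1.0):
        q = quantize(r, s, zp)
        print(r, q, dequantize(q, s, zp))
```

Within the calibrated range, the round-trip error is at most half of one quantization step (scale / 2); values outside the range saturate at −128 or 127.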

Preprocessing Data

Augmentation of Images

To enlarge the dataset and avoid overfitting, the following augmentation procedures were applied using the Keras ImageDataGenerator:

Random rotations, flips, and zooms.

Changes in brightness and contrast.

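The transformation applied to an image x is

$$x \mapsto \operatorname{augment}(x) = x \cdot T + \epsilon \tag{1}$$

where T denotes the randomly sampled transformation and ε a random perturbation.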
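Equation (1) treats augmentation as a random transformation T applied to the image plus a perturbation ε. The sketch below shows the photometric part (contrast as a multiplicative factor, brightness as an additive offset) and two geometric operations (horizontal flip and 90° rotation) on a greyscale image stored as nested lists, assuming pixel values already scaled to [0, 1]. It illustrates the idea only: Keras’ ImageDataGenerator applies arbitrary-angle rotations and zooms with interpolation, and the parameter ranges here are hypothetical.

```python
import random


def hflip(img):
    """Mirror each row (horizontal flip)."""
    return [row[::-1] for row in img]


def rot90(img):
    """Rotate 90 degrees clockwise: reverse the rows, then transpose."""
    return [list(row) for row in zip(*img[::-1])]


def brightness_contrast(img, contrast=1.0, brightness=0.0, lo=0.0, hi=1.0):
    """Pixel-wise x * contrast + brightness, clipped to [lo, hi].

    This is the x * T + eps form of Eq. (1) with T and eps as scalars.
    """
    return [[min(hi, max(lo, p * contrast + brightness)) for p in row]
            for row in img]


def augment(img, rng):
    """Apply a random combination of the operations above (hypothetical ranges)."""
    if rng.random() < 0.5:
        img = hflip(img)
    for _ in range(rng.randrange(4)):  # rotate by 0, 90, 180 or 270 degrees
        img = rot90(img)
    return brightness_contrast(img,
                               contrast=rng.uniform(0.8, 1.2),
                               brightness=rng.uniform(-0.1, 0.1))


if __name__ == "__main__":
    image = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
    print(augment(image, random.Random(0)))
```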

Normalization

Pixel values were scaled to the range [0, 1] to normalize the input to the EfficientNetB7 model.
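For 8-bit images, scaling to [0, 1] amounts to dividing each pixel by 255. One caution from the Keras Applications documentation: the Keras EfficientNet models include their own rescaling layer and expect raw inputs in the [0, 255] range, so if that implementation is used, dividing by 255 beforehand would rescale the input twice. The helper below simply performs the division; its name is illustrative.

```python
def normalize_uint8(img):
    """Scale 8-bit pixel values (0-255) to floats in [0, 1]."""
    for row in img:
        for p in row:
            if not 0 <= p <= 255:
                raise ValueError(f"pixel value {p} outside 0-255")
    return [[p / 255.0 for p in row] for row in img]


if __name__ == "__main__":
    print(normalize_uint8([[0, 51, 255]]))
```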

Training Method

The model is trained with the Adam optimizer at a learning rate of 0.001, using the binary cross-entropy loss between the true label y and the predicted probability ŷ:

$$L(y,\hat{y}) = -\left[y \log(\hat{y}) + (1-y)\log(1-\hat{y})\right] \tag{2}$$
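Equation (2) is the binary form of cross-entropy, which scores one predicted probability against one 0/1 label. For a single-label problem with several classes, such as the seven pest classes, the usual generalization is categorical cross-entropy, L = −Σ_c y_c log ŷ_c, over a one-hot label vector y and softmax outputs ŷ. Both are sketched below in plain Python for comparison; the small clamp that avoids log(0) is an implementation choice, not part of either definition.

```python
import math

EPS = 1e-7  # clamp to avoid log(0)


def binary_cross_entropy(y: float, y_hat: float) -> float:
    """Eq. (2): loss for one 0/1 label y and predicted probability y_hat."""
    y_hat = min(max(y_hat, EPS), 1.0 - EPS)
    return -(y * math.log(y_hat) + (1.0 - y) * math.log(1.0 - y_hat))


def categorical_cross_entropy(y_onehot, probs) -> float:
    """Multi-class loss: -sum_c y_c * log(p_c) for a one-hot label vector."""
    return -sum(y * math.log(min(max(p, EPS), 1.0))
                for y, p in zip(y_onehot, probs))


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    probs = softmax([1.0, 2.0, 0.5])
    print(categorical_cross_entropy([0, 1, 0], probs))
```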

A batch size of 32 images is used, and the model is trained for 50 epochs to reach convergence.

The model is implemented with the TensorFlow and Keras libraries, and the learning rate is fixed at 0.5.
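With the 7,000-image training set and a batch size of 32, the number of optimizer updates follows directly: an epoch needs ⌈7000 / 32⌉ = 219 batches, the last one holding 24 images, so 50 epochs correspond to 10,950 updates. The snippet below makes that arithmetic explicit, assuming the final partial batch is kept rather than dropped.

```python
import math


def training_schedule(num_train: int, batch_size: int, epochs: int):
    """Return (batches per epoch, size of the last batch, total optimizer steps)."""
    steps_per_epoch = math.ceil(num_train / batch_size)
    last_batch = num_train - (steps_per_epoch - 1) * batch_size
    return steps_per_epoch, last_batch, steps_per_epoch * epochs


print(training_schedule(7000, 32, 50))  # (219, 24, 10950)
```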

Performance Metrics

The following metrics were used to evaluate the performance of the model:

Accuracy: The proportion of images that are correctly classified.

Precision: The proportion of true positives among all predicted positives.

Recall: The proportion of true positives among all actual positives.

F1-Score: The harmonic mean of precision and recall.

Confusion Matrix: A table of true versus predicted classes that gives a detailed analysis of the classification performance.
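For a multi-class task, these metrics are computed per class from the confusion matrix and then averaged. The sketch below assumes that rows hold true classes and columns hold predicted classes, and it uses macro averaging (an unweighted mean over classes); the averaging scheme used in this work is not stated, so that choice is an assumption.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts samples of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_metrics(cm):
    """Return a list of (precision, recall, f1) tuples, one per class."""
    n = len(cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(n))  # column sum: TP + FP
        actual = sum(cm[c])                           # row sum: TP + FN
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append((precision, recall, f1))
    return results


def summarize(cm):
    """Overall accuracy plus macro-averaged (precision, recall, F1)."""
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(len(cm))) / total
    per_class = per_class_metrics(cm)
    k = len(per_class)
    macro = tuple(sum(m[i] for m in per_class) / k for i in range(3))
    return accuracy, macro


if __name__ == "__main__":
    cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], 3)
    print(cm)
    print(summarize(cm))
```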

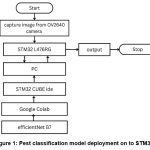

The system performs real-time classification on images streamed from the OV2640 camera module to the STM32 controller. The flow chart of the model deployment onto the STM32 is shown in Fig. 1.

Figure 1: Pest classification model deployment onto STM32.
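Each camera frame has to be reduced to the 224 × 224 network input before inference. The sketch below uses a centre crop to a square, so that the 4:3 frame is not distorted, followed by nearest-neighbour resampling. Both choices are assumptions made for illustration, since the on-device resizing method is not specified; bilinear resampling would usually give smoother results.

```python
def center_crop_square(img):
    """Crop the largest centred square from an H x W image (nested lists)."""
    h, w = len(img), len(img[0])
    side = min(h, w)
    top, left = (h - side) // 2, (w - side) // 2
    return [row[left:left + side] for row in img[top:top + side]]


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize: each output pixel copies the source pixel
    that contains the centre of the output pixel."""
    h, w = len(img), len(img[0])
    return [[img[((2 * y + 1) * h) // (2 * out_h)][((2 * x + 1) * w) // (2 * out_w)]
             for x in range(out_w)]
            for y in range(out_h)]


def frame_to_input(img, size=224):
    """Hypothetical pre-processing for one camera frame (e.g. 1600 x 1200)."""
    return resize_nearest(center_crop_square(img), size, size)


if __name__ == "__main__":
    frame = [[(r + c) % 256 for c in range(16)] for r in range(12)]  # toy 16 x 12 frame
    small = frame_to_input(frame, size=4)
    print(small)
```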

Results

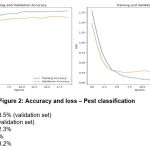

By combining the lightweight, power-efficient STM32 microcontroller with the EfficientNetB7 architecture, the system achieved a good balance between computational efficiency and accuracy. The publicly available Kaggle dataset was used for training and validation. The model was trained for 20 epochs, and the results were evaluated on the training and validation datasets. Key performance metrics such as accuracy, loss, precision, recall, and F1-score were computed to measure classification performance. Figure 2 shows the model’s performance in classifying pests.

Figure 2: Accuracy and loss for pest classification.

Discussion

The graph in Fig. 2 shows a sharp rise in training and validation accuracy within the first 5 epochs, with accuracy stabilizing at around 93% after epoch 15. Validation loss decreased tenfold from its initial value, reaching about 0.47 by the end of training. The y-axis uses a normalized representation, so the scale differs between the loss values and the epochs. The initial loss values were about 1.75 and decreased exponentially.

An overall accuracy of 93.5% was achieved. Performance remained strong across the range of lighting conditions present in the dataset, which further supports the transfer learning approach used with EfficientNetB7.

The study’s results surpass conventional pest detection models like SVM and ResNet50, which typically achieve validation accuracies of 80-85% on similar datasets. The compound scaling strategy employed in EfficientNetB7 enables superior accuracy with reduced computational overhead.

Challenges

Diversity

The dataset included a limited number of pest species, which may affect real-world performance in diverse agricultural settings. Expanding the dataset could address this limitation.

Environmental Conditions

Variability in illumination and weather could degrade the model’s accuracy in field deployment. Where such variability exists, data augmentation would need to be tailored to the specific domain.

STM32 – Inference Model Efficiency

The STM32 runs inference efficiently and in real time. Latencies were minimal, showing good promise for edge-oriented applications. However, the limited computational capacity of such microcontrollers means that this approach may not scale to larger models trained on larger datasets.

Conclusion

This study successfully implemented an efficient, lightweight pest detection system combining the EfficientNetB7 model with an STM32 microcontroller. The system is real-time, cost-effective, and accurate, and it supports precision agriculture by reducing dependence on toxic pesticides and enabling farmers to respond to infestations sooner.

Future Directions

Extending the dataset to include more diversity of pests and environmental conditions.

Exploring edge AI solutions to enable full localized decision-making processes.

Further exploration of hybrid architectures that efficiently combine EfficientNetB7 with more compact models for improved scalability and performance.

Acknowledgement

The authors would like to express their gratitude to the Kumaraguru College of Technology for providing the necessary infrastructure and support to carry out this research work. They also acknowledge the valuable contributions of the Department of Electrical and Electronics Engineering in facilitating this project.

The authors are thankful to the Kaggle community for making the pest image dataset publicly available, which was instrumental in the development and evaluation of the proposed pest detection system.

Funding Sources

The author(s) received no financial support for the research, authorship, and/or publication of this article.

Conflict of Interest

The authors do not have any conflict of interest.

Data availability

This statement does not apply to this article.

Ethics Statement

This research did not involve human participants, animal subjects, or any material that requires ethical approval.

Authors contribution

Sandhya Devi Ramiah Subburaj: Conceptualization, Methodology, Writing – Original Draft.

Cowshik Eswaramoorthy: Data Collection, Analysis, Writing – Review & Editing.

Vishnu Gunasekaran Latha: Visualization, Supervision, Project Administration.

Rakshan Kaarthi Palanisamy Chinnasamy: Resources, Supervision.

References

- Russakovsky Olga, Deng Jia, Su Hao, Krause Jonathan, Satheesh Sanjeev, Ma Sean, Huang Zhiheng, Karpathy Andrej, Khosla Aditya, Bernstein Michael, Berg Alexander C. ImageNet large scale visual recognition challenge. International Journal of Computer Vision. 2015;115(3):211-252.

- Russell Kathleen N, Do Minh T, Platnick Norman I. Introducing SPIDA-web: an automated identification system for biological species. Proceedings of Taxonomic Database Working Group Annual Meeting. 2005;1(1):11-18.

- Ding J, Yue C, Wang C, Liu W, Zhang L, Chen B, Shen S, Piao Y, Zhang L. Machine learning method for the cellular phenotyping of nasal polyps from multicentre tissue scans. Expert Review of Clinical Immunology. 2023;19(8):1023-1028.

- Sandhya Devi Ramiah Subburaj, Vijay Kumar Vaithiyam Rengarajan, Sivakumar Palanisamy. A review of image classification and object detection on machine learning and deep learning techniques. In: 2021 5th International Conference on Electronics, Communication and Aerospace Technology (ICECA). IEEE; 2021:1-8.

- Rehman Asad, Dhaya Ranganathan, Ampatzidis Yiannis, Taylor Glenn. Automatic detection of weeds: Synergy between EfficientNet and transfer learning. 2021;2(1):45-67.

- Xie Jian, Vien Quoc-Tuan, Sellahewa Harin. Efficient pest classification in smart agriculture using transfer learning. EAI Endorsed Transactions on Industrial Networks and Intelligent Systems. 2021;8(26):1-10.

- Wang Jian, Zhang Xiaoming, Li Min. TFEMRNet: A Two-Stage Multi-Feature Fusion Model for Efficient Small Pest Detection on Edge Platforms. 2024;6(4):4688-4703.

- Khalil Muhammad, Tehsin Samabia, Humayun Mamoona, Jhanjhi Noor, AlZain Mohammed. Multi-Scale Network for Thoracic Organs Segmentation. Computers, Materials and Continua. 2022;70(3):3251-3265.

- Wang Xin, Sun Jie, Zhang Zhen, Jiang Yue. Exploring multiple optimization algorithms in transfer learning with EfficientNet models for agricultural pest classification. CTU Journal of Innovation and Sustainable Development. 2024;16(2):35-41.

- Pan Sinno Jialin, Yang Qiang. A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering. 2009;22(10):1345-1359.

- Ramiah Subburaj Sandhya Devi, Vijayakumar Vaithyam Rengarajan, Sivakumar Palaniswamy. Transfer learning based image classification of diseased tomato leaves with optimal fine-tuning combined with heat map visualization. Journal of Agricultural Sciences. 2023;29(4):1003-1017.

- Tan Mingxing, Le Quoc V. EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv preprint arXiv:1905.11946. 2019.

- Ghosh Animesh, Soni Bhawna, Baruah Udayan. Transfer Learning-Based Deep Feature Extraction Framework Using Fine-Tuned EfficientNet B7 for Multiclass Brain Tumor Classification. Arabian Journal for Science and Engineering. 2023;48(4):1201-1212.

- Yosinski Jason, Clune Jeff, Bengio Yoshua, Lipson Hod. How transferable are features in deep neural networks? Advances in Neural Information Processing Systems. 2014;27(1):3320-3332.

- Raghu Maithra, Zhang Chiyuan, Kleinberg Jon, Bengio Samy. Transfusion: Understanding transfer learning for medical imaging. Advances in Neural Information Processing Systems. 2019;32(1):3345-3356.

- HS Sushma, Sooda Kavya, Karunakara Rai B. EfficientNet-B7 framework for anomaly detection in mammogram images. Multimedia Tools and Applications. 2024;83(4):7681-7702.

- Maican Elena, Iosif Alina, Maican Stefan. Precision corn pest detection: Two-step transfer learning for beetles (Coleoptera) with MobileNet-SSD. 2023;13(12):2287-2299.

- Ebrahimi Mohammad Ali, Khoshtaghaza Mohammad Hassan, Minaei Sayed, Jamshidi Behzad. Vision-based pest detection based on SVM classification method. Computers and Electronics in Agriculture. 2017;137(1):52-58.

- Kusrini Komang, Suputa Suyasa, Setyanto Agus, Agastya I Made Adi, Priantoro Heru, Chandramouli Krishnamurthy, Izquierdo Ebroul. Data augmentation for automated pest classification in Mango farms. Computers and Electronics in Agriculture. 2020;179(1):105842.

- Espejo-Garcia Borja, Malounas Ioannis, Mylonas Nikolas, Kasimati Athena, Fountas Spyros. Using EfficientNet and transfer learning for image-based diagnosis of nutrient deficiencies. Computers and Electronics in Agriculture. 2022;196(1):106868.

- Mohanty Sharada Prasad, Hughes David P, Salathé Marcel. Using deep learning for image-based plant disease detection. Frontiers in Plant Science. 2016;7(1):1419.
